In this work, accepted to ICLR 2021, we study a well-established neurobiological network motif from the fruit fly brain and investigate whether its architecture can be reused to solve common natural language processing (NLP) tasks. Specifically, we focus on the network of Kenyon cells (KCs) in the mushroom body of the fruit fly brain. We trained this network on a large corpus of text and tested it on common NLP tasks. Biologically, this network evolved to process sensory stimuli from olfaction, vision, and other modalities, not to understand language. However, it uses a general-purpose algorithm to embed inputs (from different modalities) into a high-dimensional space so that these embeddings are locality sensitive: similar inputs are mapped to nearby embeddings.

In our network, the semantic meanings of words and their textual contexts are encoded in the pattern of activations of the KCs. It is convenient to represent this pattern of activations as a binary vector, where the active KCs are assigned the state 1 and the inactive KCs are assigned the state 0. Thus, our word embeddings, which we call FlyVec, are binary (Boolean) vectors, in contrast to conventional continuous word embeddings like Word2vec.

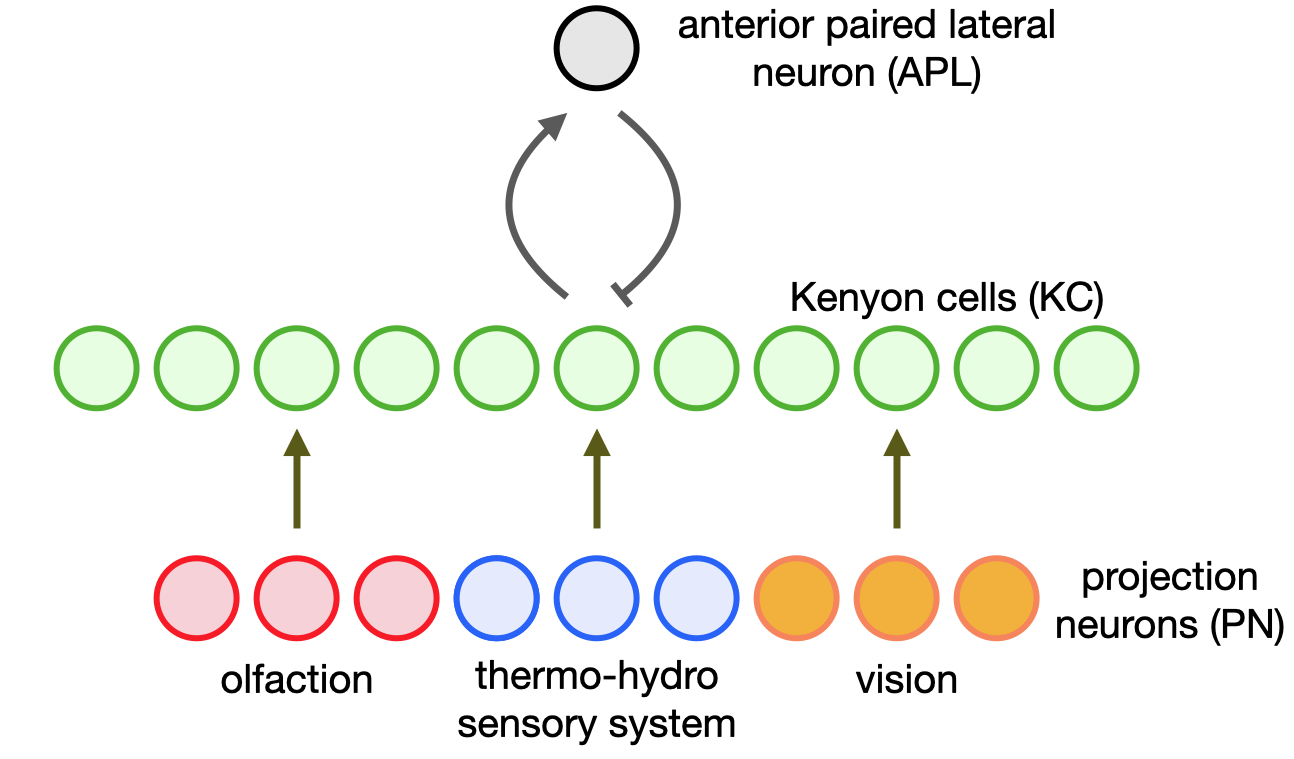

Biological Network

The mushroom body is a major area of the fruit fly brain that is responsible for processing sensory information. It receives inputs from a set of projection neurons (PNs) conveying information from several sensory modalities. The major modality is olfaction.

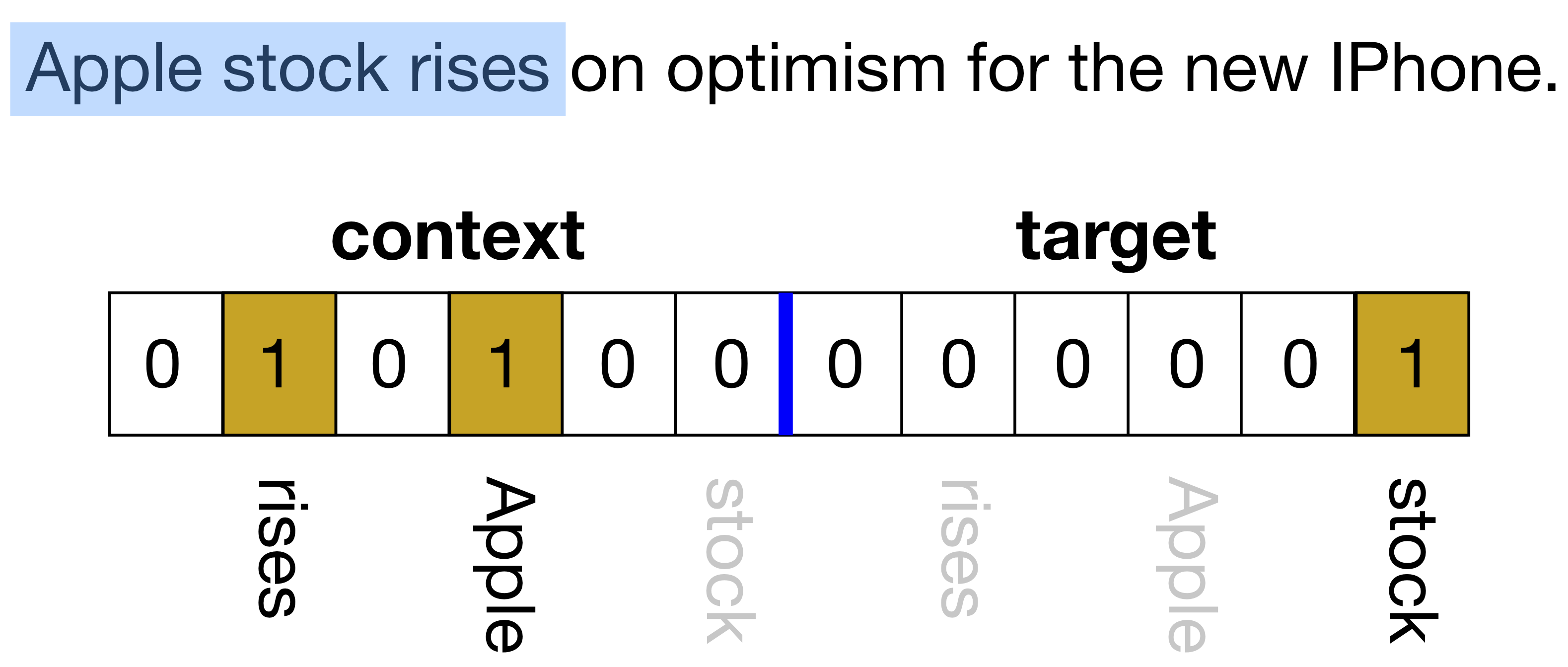

Training the Network

We decomposed each sentence from the training corpus into a collection of $w$-grams, i.e., runs of $w$ consecutive words. The input to the network is a vector with two blocks: context and target. The target block is a one-hot encoding of the middle token in the $w$-gram, and the remaining tokens in the $w$-gram are placed in the context block as a bag of tokens.
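The construction of a single training input can be sketched in Python as follows. This is a minimal illustration, not the FlyVec training code: the helper names `w_grams` and `encode_w_gram` are hypothetical, we assume $w$ is odd so that the middle token is well defined, and we assume the context block records token presence (0/1) rather than counts.

```python
from typing import Dict, List


def w_grams(tokens: List[str], w: int) -> List[List[str]]:
    """Return every run of w consecutive tokens in a sentence."""
    return [tokens[i:i + w] for i in range(len(tokens) - w + 1)]


def encode_w_gram(gram: List[str], vocab: Dict[str, int]) -> List[int]:
    """Hypothetical encoding of one w-gram as [context block | target block].

    Each block has len(vocab) entries. Assumes len(gram) is odd.
    """
    n = len(vocab)
    x = [0] * (2 * n)
    mid = len(gram) // 2  # position of the target (middle) token
    for i, tok in enumerate(gram):
        if i == mid:
            x[n + vocab[tok]] = 1  # one-hot target block (second half)
        else:
            x[vocab[tok]] = 1  # bag-of-tokens context block (first half); word order is discarded
    return x


tokens = "the cat sat on the mat".split()
vocab = {tok: i for i, tok in enumerate(sorted(set(tokens)))}
grams = w_grams(tokens, w=3)
print(grams[0])                        # ['the', 'cat', 'sat']
print(encode_w_gram(grams[0], vocab))  # [0, 0, 0, 1, 1, 1, 0, 0, 0, 0]
```

Here the vocabulary is sorted alphabetically (cat, mat, on, sat, the), so the context tokens "the" and "sat" set positions 4 and 3, and the target "cat" sets position 0 of the second block.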

An unsupervised learning algorithm

Individual KCs Explorer

In the demo, the user can select individual neurons from the group of KCs and explore the strengths of the synaptic weights connecting the selected KC to the PNs. Each PN corresponds to a token from our vocabulary. The larger the synaptic weight, the more strongly that token contributes to the excitation of the given KC, and the larger it appears (relatively) in that KC's word cloud. For every KC, the strengths of the incoming synapses from the PNs are passed through a softmax function, and the twenty strongest inputs are additionally displayed as a histogram.
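The word-cloud and histogram computation for one KC can be sketched as below. This is a minimal sketch under our own assumptions, not the demo's code: the KC's incoming synaptic weights are given as a plain list with one entry per vocabulary token, and `softmax` and `top_tokens` are hypothetical helper names.

```python
import math
from typing import List, Tuple


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_tokens(weights: List[float], id_to_token: List[str],
               k: int = 20) -> List[Tuple[str, float]]:
    """Return the k tokens with the largest softmax probability for one KC."""
    probs = softmax(weights)
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    return [(id_to_token[i], probs[i]) for i in order]


# Toy weights for a single KC over a 4-token vocabulary (illustrative values only).
id_to_token = ["disease", "market", "patients", "weapon"]
weights = [2.0, -1.0, 1.5, 0.0]
for tok, p in top_tokens(weights, id_to_token, k=2):
    print(f"{tok}: {p:.3f}")
```

Because the softmax is monotonic, it does not change the ranking of tokens; it only turns the raw weights into a probability distribution that can be used to scale font sizes in a word cloud.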

Consider, for example, Neuron 75. The top three tokens activating this KC are disease, disorder, and patients. This neuron has evidently learned a concept associated with medical conditions.

As another example, Neuron 353 has the top tokens governments, authorities, and state. Thus, it has learned a concept of authorities at various levels. This concept, however, is not pure. For example, the fifth strongest token here is <NUM>, which represents a number token. Perhaps this is not surprising, since a number token can easily appear in the training corpus quantifying, for example, the number of law enforcement officers. Another sign of entanglement of concepts for this KC is the token news. While news is not a systemic authority, this word can easily appear in the context of government, since this topic is often discussed in the news.

Response to a Query Sentence

In the second part of the demo, we explore the activation patterns of neurons in the fruit fly network in response to an input sentence. Our model encodes the query sentence as a bag of words and interprets it as the activity of PNs. The recurrent network of KCs coupled to the APL neuron performs the computation and outputs a pattern of excitations across KCs in which only a small number of KCs are in the ON state, while the majority are in the OFF state. This pattern of excitations can be converted into a hash code for the query sentence, which is used to evaluate our algorithm (see the paper).

For a given input sentence, our demo returns word-cloud visualizations of the words that contribute most strongly to the excitation of the four most strongly activated KCs. The synaptic weights learned by each of these KCs are passed through a softmax function to obtain a probability distribution over individual tokens, and these distributions are visualized as word clouds.
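The query pipeline can be sketched as follows. This is a simplified sketch, not the released FlyVec code: we assume each KC's activation is the plain dot product of its synaptic weights with the bag-of-words vector, and we approximate the inhibition from the APL neuron as a k-winners-take-all step that keeps the k most active KCs ON. The names `bag_of_words`, `kc_activations`, `top_k_indices`, and `hash_code` are hypothetical.

```python
from typing import Dict, List


def bag_of_words(sentence: str, vocab: Dict[str, int]) -> List[int]:
    """Mark each in-vocabulary token of the query as present (PN activity)."""
    x = [0] * len(vocab)
    for tok in sentence.lower().split():
        if tok in vocab:
            x[vocab[tok]] = 1
    return x


def kc_activations(W: List[List[float]], x: List[int]) -> List[float]:
    """Assumed activation: dot product of each KC's weight row with the input."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def top_k_indices(acts: List[float], k: int) -> List[int]:
    """Indices of the k most active KCs, strongest first."""
    return sorted(range(len(acts)), key=lambda i: acts[i], reverse=True)[:k]


def hash_code(acts: List[float], k: int) -> List[int]:
    """k-winners-take-all stand-in for APL inhibition: k KCs ON (1), the rest OFF (0)."""
    code = [0] * len(acts)
    for i in top_k_indices(acts, k):
        code[i] = 1
    return code


# Toy network: 5 KCs over a 4-token vocabulary (illustrative weights only).
vocab = {"market": 0, "stock": 1, "weapon": 2, "mass": 3}
W = [
    [0.0, 0.0, 1.0, 1.0],  # KC 0: weapons
    [1.0, 1.0, 0.0, 0.0],  # KC 1: markets
    [0.5, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.2, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
acts = kc_activations(W, bag_of_words("Stock market weapon", vocab))
print(hash_code(acts, k=2))      # [1, 1, 0, 0, 0]
print(top_k_indices(acts, k=4))  # [1, 0, 2, 3]
```

In this sketch, the four indices returned by `top_k_indices(acts, 4)` are the KCs whose weight rows would be passed through a softmax to draw the word clouds.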

Consider the example sentence:

Entertainment industry shares rise following the premiere of the mass destruction weapon documentary.

This query sentence activates several KCs. The demo shows the word clouds of tokens learned by the four most strongly activated KCs. The inset of each word cloud shows the activation strength of that specific KC (highlighted in red) compared to the activations of the remaining KCs (shown in gray). The most strongly activated KC has the largest weights for the words weapon, mass, etc. The second most strongly activated KC is sensitive to the words market, stock, etc. This illustrates how the fruit fly network processes queries. In this example, the query refers to several distinct combinations of concepts: weapons of mass destruction, the stock market, and the movie industry. Each of these concepts has a dedicated KC responsible for it. However, the responses are not perfect. In this case, we would expect the KC responsible for the movie industry concept to have a higher activation than the KC responsible for types of weapons of mass destruction. Overall, however, all the concepts picked up by the KCs are meaningful and related to the query.

The user can query the fruit fly network with different sentences. We have observed that our network works best on queries taken from news articles (preferably with political or financial content). Presumably, this is a result of the particular corpus used for training. We have included both good examples (denoted by ✅), where the responses of the network are reasonable, and bad examples (denoted by ❌), where the network fails to identify the relevant concepts.

Summary

In this work, we asked whether the core computational algorithm of the network of KCs in the fruit fly brain, one of the best-studied networks in neuroscience, can be repurposed for learning word embeddings from text, which is a well-defined machine learning task. We have shown that, surprisingly, this network can learn correlations between words and their context while producing high-quality word embeddings. Our fruit fly network generates binary word embeddings (vectors with entries in $\{0,1\}$, as opposed to continuous vectors like GloVe and Word2vec), which is an advantage for memory efficiency. The Boolean nature of the FlyVec word embeddings also raises interesting questions about the interpretability of the learned embeddings. As we have illustrated in this demo, some of the individual KCs have learned semantically coherent concepts. For example, we could ask, “Does the query sentence pertain to a medical condition?” If the answer is positive, it is likely that Neuron 75, discussed above, will be in the ON state for that query. Thus, individual KCs may provide a logical way to detect the presence or absence of certain attributes of words and query sentences. We hope to systematically investigate this intriguing aspect of the interpretability of FlyVec embeddings in the future.
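The memory and interpretability points above can be illustrated with a small sketch that packs a binary, FlyVec-style code into the bits of a Python integer. This is our own illustration, not part of FlyVec, and the helpers `pack`, `hamming`, and `kc_is_on` are hypothetical. A code over N KCs then needs N bits instead of, for example, 32N bits for a float32 vector. Similarity can be measured with a Hamming distance (XOR plus a bit count), and a question such as "is Neuron 75 ON?" becomes a single bit test.

```python
from typing import Iterable


def pack(code: Iterable[int]) -> int:
    """Pack a 0/1 vector into an int: bit i is set iff KC i is ON."""
    bits = 0
    for i, b in enumerate(code):
        if b:
            bits |= 1 << i
    return bits


def hamming(a: int, b: int) -> int:
    """Number of KCs whose ON/OFF state differs between two packed codes."""
    return bin(a ^ b).count("1")


def kc_is_on(bits: int, kc: int) -> bool:
    """Logical query on a single KC, e.g. kc=75 for the 'medical condition' neuron."""
    return ((bits >> kc) & 1) == 1


a = pack([1, 0, 1, 0])
b = pack([1, 1, 0, 0])
print(a, b)            # 5 3
print(hamming(a, b))   # 2
print(kc_is_on(a, 2))  # True

# Hypothetical code over 400 KCs (size chosen arbitrarily) with only KCs 75 and 353 ON.
query = pack(1 if i in (75, 353) else 0 for i in range(400))
print(kc_is_on(query, 75))  # True
```

Python's arbitrary-precision integers keep the sketch short. A production system would more likely store codes as arrays of fixed-width machine words and use hardware popcount instructions.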